Guide to Using CLI Proxy API to Optimize AI Usage Costs

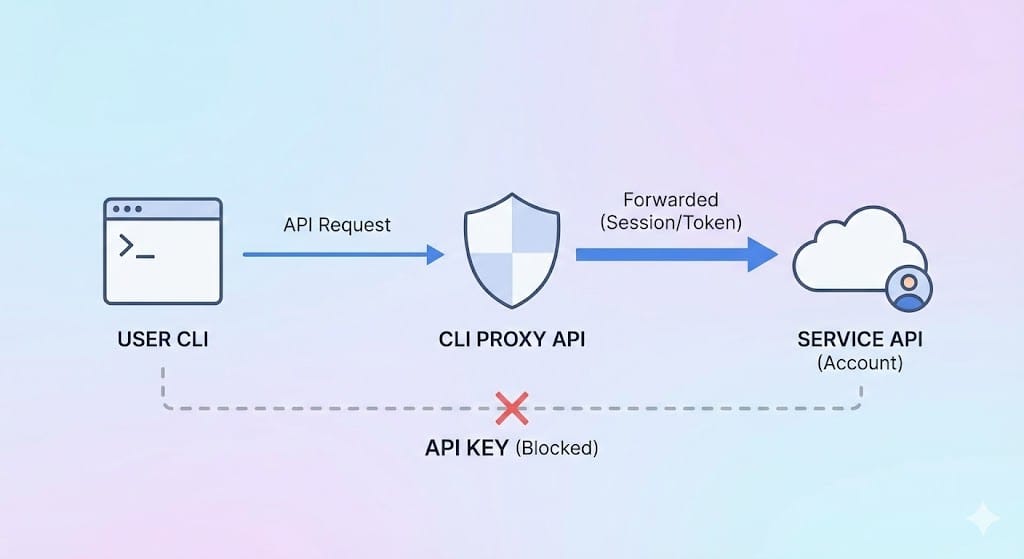

The current issue is that many providers, such as Google, OpenAI, and Anthropic (Claude), keep their regular subscription plans separate from API billing, and their CLI tools (for using AI from the terminal) are tied to those subscriptions. As a result, you cannot really optimize your monthly spending across all of your tasks. This project helps solve that problem by emulating the CLI environment. You can then route every request through your own account, whether it comes from a CLI tool or from an API client such as n8n, and take full advantage of the plan you have purchased.

What is CLI Proxy API?

CLI Proxy API is a proxy server that wraps CLI-based models in an API, exposing endpoints compatible with OpenAI, Gemini, and Claude clients. Instead of running commands manually in a terminal, developers can send standard API requests.

Main Features:

- Multi-Platform Support: Compatible with OpenAI, Gemini, and Claude endpoints.

- Flexible Responses: Supports both streaming (real-time) and non-streaming output.

- Smart Tools: Supports function calls and tool integrations.

- Image Support: Accepts image input in addition to text.

- Multi-Account Management: Handles multiple accounts simultaneously with load balancing.

How to Install CLI Proxy API

Installation Steps

Clone the Repository

Open a terminal and run:

```bash
git clone https://github.com/luispater/CLIProxyAPI.git
cd CLIProxyAPI
```

Compile the source code into an executable file (this requires Go to be installed):

```bash
go build -o cli-proxy-api ./cmd/server
```

This creates the cli-proxy-api binary in the current directory.

Alternatively, on macOS you can install and start it with Homebrew:

```bash
brew install cliproxyapi
brew services start cliproxyapi
```

If you run into an error, for example from a copy/paste mistake, ask an AI assistant for guidance.

How to Configure CLI Proxy API: Basic Steps

Step 1: Log In

Authenticate using your Google account:

```bash
./cli-proxy-api --login
```

Step 2: Start the Server

Start the proxy server (default port is 8317):

```bash
./cli-proxy-api
```

Step 3: Make an API Call

Start a Chat:

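Send a POST request to http://localhost:8317/v1/chat/completions with a JSON body like the one below. If you have set api-keys in your config, also send the header Authorization: Bearer followed by one of those keys.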

```json
{
  "model": "gemini-2.5-pro",
  "messages": [
    {
      "role": "user",
      "content": "Hello, how are you?"
    }
  ],
  "stream": true
}
```
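The same call can be made from a script outside n8n. The sketch below uses only the Python standard library and is a minimal example under several assumptions:

- The server runs at http://localhost:8317.
- `your-api-key-1` is one of the api-keys in config.yaml.
- Because the body sets "stream": true, the reply arrives as OpenAI-style server-sent events (`data: {...}` lines ending with `data: [DONE]`).

The helper names `build_chat_request`, `parse_sse_chunks` and `stream_chat` are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8317/v1"  # assumption: default port, server on this machine
API_KEY = "your-api-key-1"             # placeholder: one of the api-keys in config.yaml


def build_chat_request(prompt: str, model: str = "gemini-2.5-pro",
                       stream: bool = True) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def parse_sse_chunks(lines):
    """Yield text deltas from OpenAI-style SSE lines, stopping at 'data: [DONE]'."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


def stream_chat(prompt: str) -> str:
    """Send the prompt, print the reply as it streams in, and return the full text."""
    parts = []
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        for piece in parse_sse_chunks(resp):  # the HTTP response iterates line by line
            print(piece, end="", flush=True)
            parts.append(piece)
    print()
    return "".join(parts)
```

With the server running, `stream_chat("Hello, how are you?")` prints the answer as it arrives. Keeping the SSE parsing separate from the network call makes it easy to test without a live server.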

List Available Models:

Send a GET request to http://localhost:8317/v1/models.
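Because the endpoint mirrors OpenAI's API, the response is assumed to use OpenAI's list format, `{"object": "list", "data": [{"id": ...}, ...]}`. A minimal Python sketch that fetches the list and keeps only the model IDs is shown below. The base URL, the placeholder key and the helper names `model_ids` and `list_models` are illustrative assumptions.

```python
from __future__ import annotations

import json
import urllib.request

BASE_URL = "http://localhost:8317/v1"  # assumption: default port, server on this machine
API_KEY = "your-api-key-1"             # placeholder: one of the api-keys in config.yaml


def model_ids(body: bytes | str) -> list[str]:
    """Return the model IDs from an OpenAI-style {"object": "list", "data": [...]} body."""
    return [entry["id"] for entry in json.loads(body).get("data", [])]


def list_models() -> list[str]:
    """GET /v1/models from the proxy and return the IDs it reports."""
    req = urllib.request.Request(
        f"{BASE_URL}/models",
        headers={"Authorization": f"Bearer {API_KEY}"},
    )
    with urllib.request.urlopen(req) as resp:
        return model_ids(resp.read())
```

The IDs returned here are the values to put in the "model" field of a chat request.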

Customizing CLI Proxy API

Configuration is managed via a YAML file (default: config.yaml). To specify a different file:

```bash
./cli-proxy-api --config ~/CLIProxyAPI/config.yaml
```

If you built from source, edit config.yaml in the folder where you cloned the project. If you installed via Homebrew, pass the path of your config file with the --config flag as shown above.

Sample config.yaml:

```yaml
port: 8317
remote-management:
  allow-remote: true
auth-dir: "~/.cli-proxy-api"
debug: false
proxy-url: ""
quota-exceeded:
  switch-project: true
  switch-preview-model: true
api-keys:
  - "your-api-key-1"
  - "your-api-key-2"
generative-language-api-key:
  - "AIzaSy...01"
  - "AIzaSy...02"
```
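For clients, two fields of this file matter most:

- `port` determines the Base URL.
- Each entry in `api-keys` is a key a client can send as `Authorization: Bearer <key>`, as the n8n HTTP Request example later in this guide does.

The Python sketch below maps those fields to client settings. It is a minimal example under assumptions: `SAMPLE_CONFIG` is a hypothetical dict standing in for the parsed YAML, `client_settings` is a hypothetical helper, and the /v1 suffix follows the endpoint paths used in this guide.

```python
from __future__ import annotations

# Hypothetical: the relevant part of the sample config.yaml above, as the dict a
# YAML loader (for example PyYAML's yaml.safe_load) would produce.
SAMPLE_CONFIG = {
    "port": 8317,
    "api-keys": ["your-api-key-1", "your-api-key-2"],
}


def client_settings(config: dict, host: str = "localhost") -> dict[str, str | None]:
    """Derive the Base URL and API key an OpenAI-compatible client should use."""
    keys = config.get("api-keys") or []
    return {
        # OpenAI-style routes (/v1/chat/completions, /v1/models) live under /v1.
        "base_url": f"http://{host}:{config.get('port', 8317)}/v1",
        # Any entry from api-keys should do; None here just means the list is empty.
        "api_key": keys[0] if keys else None,
    }
```

When n8n runs on another machine, pass the proxy's LAN IP or domain as `host` instead of localhost.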

Advanced Features

You can also deploy it with Docker on a VPS for convenient access from multiple computers. You can see more details at

However, I recommend installing it on your own machine first, both for security and because authenticating (granting access to) your accounts is easier there. Then copy the resulting auth files (stored in auth-dir, ~/.cli-proxy-api by default) to the server where you run Docker and mount that folder into the container, as in the commands below. That is the easiest way.

Docker Deployment

Log In:

```bash
docker run --rm -p 8085:8085 \
  -v /path/to/your/config.yaml:/CLIProxyAPI/config.yaml \
  -v /path/to/your/auth-dir:/root/.cli-proxy-api \
  eceasy/cli-proxy-api:latest /CLIProxyAPI/CLIProxyAPI --login
```

Start the Server:

```bash
docker run --rm -p 8317:8317 \
  -v /path/to/your/config.yaml:/CLIProxyAPI/config.yaml \
  -v /path/to/your/auth-dir:/root/.cli-proxy-api \
  eceasy/cli-proxy-api:latest
```

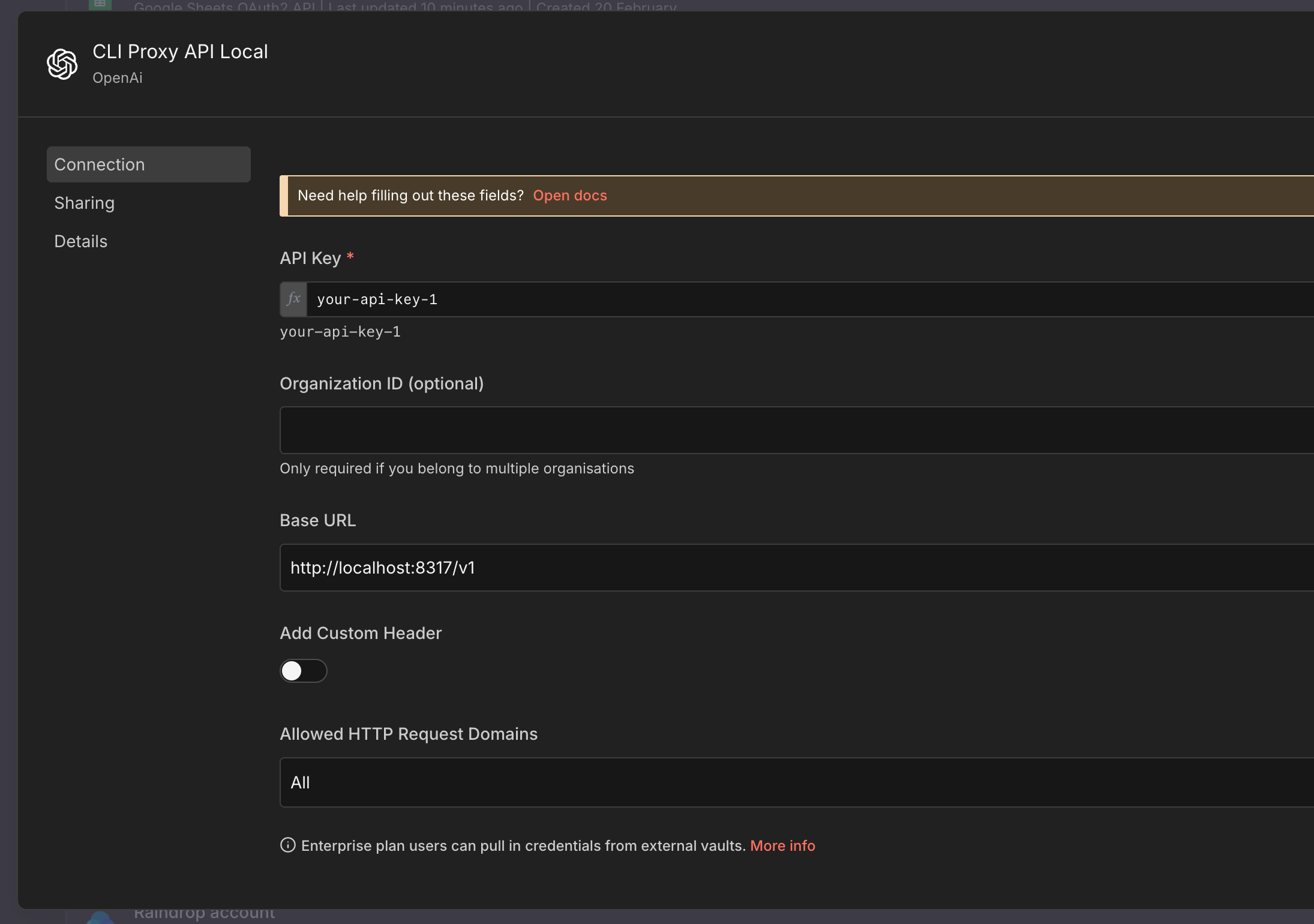

Adding CLI Proxy API to n8n

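Currently, whichever AI model you use, you configure it in n8n through the OpenAI credential and the OpenAI nodes. The proxy exposes an OpenAI-compatible API, and those are the nodes that speak that protocol. In the credential, set the API key to one of your api-keys. Set the Base URL to the proxy address followed by /v1, for example http://localhost:8317/v1.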

If your n8n instance is hosted on a VPS (that is, outside your LAN), you need to expose CLI Proxy API through a domain so n8n can reach it. If n8n runs on a different machine in the same LAN, enter the proxy machine's IP address in the Base URL instead of localhost.

After that, connect the model to your AI nodes and chat as usual; sending images works as well.
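To send an image from your own code rather than through the n8n nodes, you can use the usual OpenAI-compatible format: a user message whose content is a list of parts, including an `image_url` part holding a base64 data URL. The Python sketch below builds such a message. It assumes the proxy accepts this standard format, which matches the image-input feature listed earlier. `image_message` and `chat_body` are hypothetical helper names, and the default model is just the one used elsewhere in this guide.

```python
import base64
import json


def image_message(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build an OpenAI-style user message that carries text plus one inline image."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


def chat_body(prompt: str, image_bytes: bytes, model: str = "gemini-2.5-pro") -> str:
    """Serialize a complete /v1/chat/completions body with one image attached."""
    return json.dumps({
        "model": model,
        "messages": [image_message(prompt, image_bytes)],
    })
```

For example, `chat_body("What is in this picture?", open("photo.png", "rb").read())` produces a body you can POST to /v1/chat/completions. The file name is only an example.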

Using It to Generate Images

This part is a bit more complex. You can only do it through an HTTP Request node with the following settings:

- Method: POST, URL: https://your-proxy-domain/v1/chat/completions (replace this with your proxy domain or local IP)
- Send Headers: enabled, with the header name Authorization and the value Bearer your-api-key-1

Body (sent as JSON):

```json
{
  "model": "gemini-2.5-flash-image",
  "messages": [
    {
      "role": "user",
      "content": "{{ $json.prompt }}"
    }
  ],
  "modalities": ["image", "text"],
  "image_config": {
    "aspect_ratio": "16:9"
  }
}
```
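One caveat with this body: `{{ $json.prompt }}` is pasted directly inside a JSON string. A prompt containing a double quote or a line break would therefore produce invalid JSON. In n8n, writing `{{ JSON.stringify($json.prompt) }}` without the surrounding quotes should avoid this.

Outside n8n, building the body with a JSON serializer has the same effect. The Python sketch below does that. It copies the fields from the body above. `image_generation_body` is a hypothetical helper name.

```python
import json


def image_generation_body(prompt: str, aspect_ratio: str = "16:9",
                          model: str = "gemini-2.5-flash-image") -> str:
    """Serialize the image-generation request; json.dumps escapes quotes and newlines."""
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "modalities": ["image", "text"],
        "image_config": {"aspect_ratio": aspect_ratio},
    })
```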

The response returns the image as a base64 data URL (at choices[0].message.images[0].image_url.url), so you need an extra step that strips the data-URL prefix and converts the rest into a binary file. Below is a sample workflow; copy the JSON and paste it directly into your n8n workflow to see how it works.

Sample Workflow (containing HTTP request and conversion):

```json
{
  "nodes": [
    {
      "parameters": {
        "method": "POST",
        "url": "https://your-proxy-domain/v1/chat/completions",
        "sendHeaders": true,
        "headerParameters": {
          "parameters": [
            {
              "name": "Authorization",
              "value": "Bearer your-api-key-1"
            }
          ]
        },
        "sendBody": true,
        "specifyBody": "json",
        "jsonBody": "={\n \"model\": \"gemini-2.5-flash-image\",\n \"messages\": [\n {\n \"role\": \"user\",\n \"content\": \"{{ $json.prompt }}\"\n }\n ],\n \"modalities\": [\"image\", \"text\"],\n \"image_config\": {\n \"aspect_ratio\": \"16:9\"\n }\n} ",
        "options": {}
      },
      "type": "n8n-nodes-base.httpRequest",
      "typeVersion": 4.3,
      "position": [1152, 512],
      "id": "28028c22-8042-43b3-8e87-7cbe0d4bb3e6",
      "name": "Generate Image"
    },
    {
      "parameters": {
        "operation": "toBinary",
        "sourceProperty": "base64Data",
        "options": {}
      },
      "type": "n8n-nodes-base.convertToFile",
      "typeVersion": 1.1,
      "position": [1568, 512],
      "id": "685cf2c3-ee62-4b76-8602-bc30a165dc1a",
      "name": "Convert to File"
    },
    {
      "parameters": {
        "jsCode": "const dataUrl = $json.choices[0].message.images[0].image_url.url;\nconst base64Data = dataUrl.replace(/^data:image\\/\\w+;base64,/, '');\nreturn { base64Data };"
      },
      "type": "n8n-nodes-base.code",
      "typeVersion": 2,
      "position": [1360, 512],
      "id": "c1032c53-d09d-4eef-9779-faff6752f8d0",
      "name": "Get base64"
    }
  ],
  "connections": {
    "Generate Image": {
      "main": [
        [
          { "node": "Get base64", "type": "main", "index": 0 }
        ]
      ]
    },
    "Convert to File": {
      "main": [
        []
      ]
    },
    "Get base64": {
      "main": [
        [
          { "node": "Convert to File", "type": "main", "index": 0 }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "instanceId": "87a32b77896263bd56ebc621c5133167cbeee66743c9f89999f622d0ecb8f05e"
  }
}
```
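Outside n8n, the "Get base64" and "Convert to File" steps amount to stripping the data-URL prefix, base64-decoding the rest and writing the bytes to disk. The Python sketch below does that. It assumes the same response shape the Code node reads (choices[0].message.images[0].image_url.url) and reuses the node's regular expression. `extract_image_bytes` and `save_image` are hypothetical helper names.

```python
from __future__ import annotations

import base64
import re
from pathlib import Path

# Same pattern as the "Get base64" node: strip a "data:image/<type>;base64," prefix.
DATA_URL_PREFIX = re.compile(r"^data:image/\w+;base64,")


def extract_image_bytes(response: dict) -> bytes:
    """Decode the first image in a chat-completions response (the "Get base64" step)."""
    data_url = response["choices"][0]["message"]["images"][0]["image_url"]["url"]
    return base64.b64decode(DATA_URL_PREFIX.sub("", data_url, count=1))


def save_image(response: dict, path: str | Path) -> Path:
    """Write the decoded image to disk (the "Convert to File" step)."""
    out = Path(path)
    out.write_bytes(extract_image_bytes(response))
    return out
```

Passing the parsed JSON response and a file name such as "output.png" to `save_image` writes the generated image next to your script.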

Adding CLI Proxy API to Claude Code, Codex, Gemini CLI

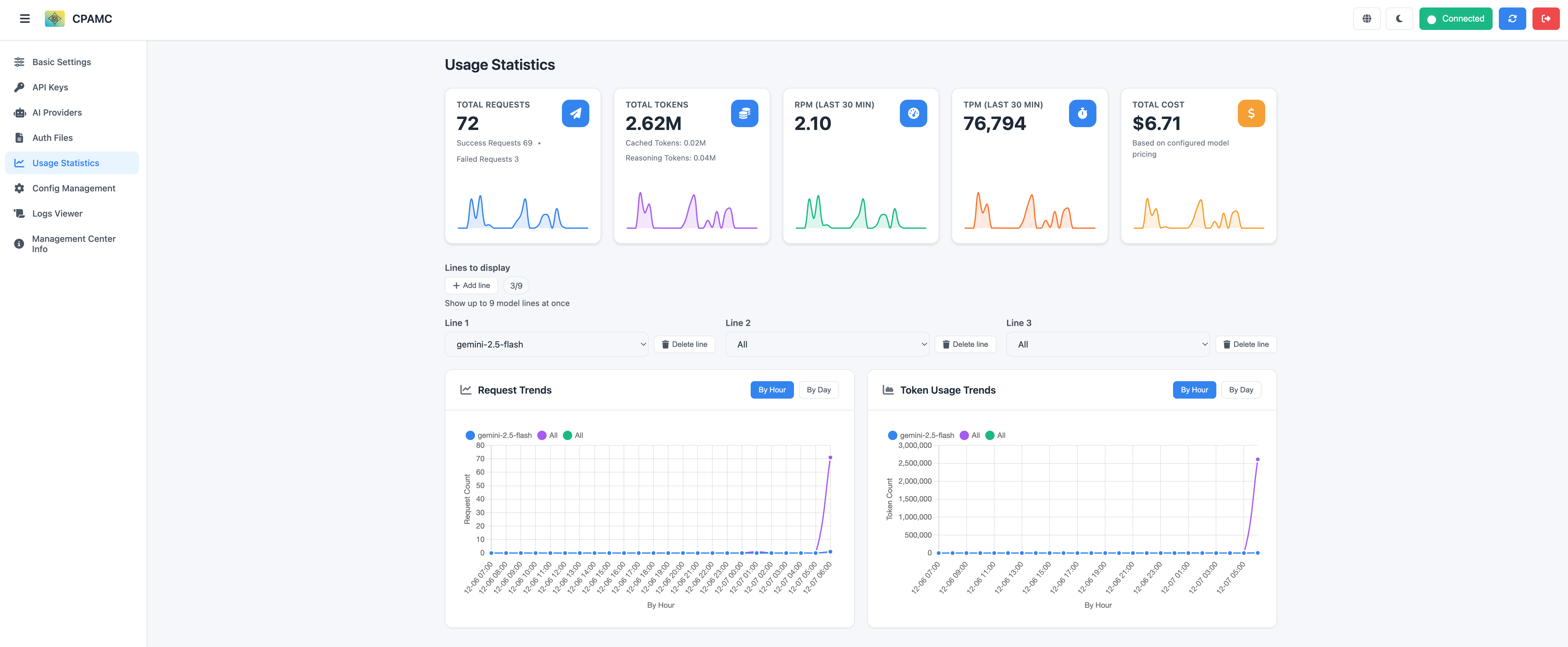

Everything is already documented here; I mostly use Claude Code with Gemini models to save costs.
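As one illustration of the Claude Code setup, here is a minimal Python launcher. It relies on Claude Code reading `ANTHROPIC_BASE_URL`, `ANTHROPIC_AUTH_TOKEN` and `ANTHROPIC_MODEL` from the environment. It also assumes the following:

- The proxy serves its Claude-compatible route on the same port (Claude Code appends /v1/messages to the base URL itself).
- `your-api-key-1` is one of your api-keys.
- gemini-2.5-pro appears in your /v1/models list.

`claude_code_env` and `launch_claude_code` are hypothetical helpers. Follow the project's own documentation for the exact settings.

```python
from __future__ import annotations

import os
import shutil
import subprocess


def claude_code_env(model: str = "gemini-2.5-pro",
                    base_url: str = "http://localhost:8317",
                    token: str = "your-api-key-1") -> dict[str, str]:
    """Copy the current environment and point Claude Code at the proxy."""
    env = dict(os.environ)
    env.update({
        "ANTHROPIC_BASE_URL": base_url,  # proxy root; Claude Code adds /v1/messages itself
        "ANTHROPIC_AUTH_TOKEN": token,   # assumption: one of the api-keys from config.yaml
        "ANTHROPIC_MODEL": model,        # a model ID listed by GET /v1/models
    })
    return env


def launch_claude_code(**overrides: str) -> int:
    """Run the `claude` CLI with the proxy settings and return its exit code."""
    exe = shutil.which("claude")
    if exe is None:
        raise FileNotFoundError("Claude Code ('claude') was not found on PATH")
    return subprocess.run([exe], env=claude_code_env(**overrides)).returncode
```

For example, `launch_claude_code(model="gemini-2.5-flash")` starts Claude Code on a cheaper Gemini model without changing your shell profile.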

Wishing you success!